Big Data Can Also Mean Big Errors!

When the Sloan Digital Sky Survey began in 2000, it generated more astronomical data in a few weeks than astrophysicists had gathered in the entire history of astronomy. The Chile-based Large Synoptic Survey Telescope will generate this quantity of data every five days once it goes online in 2016.

If you think that this much data can only come from studying space, think again. Wal-Mart handles more than a million transactions per hour, and its databases are estimated to be about 167 times larger than all the books in the U.S. Library of Congress. Similarly, Facebook hosts more than 40 billion photographs, and the human genome contains about 3 billion base pairs; both are examples of enormous data sets.

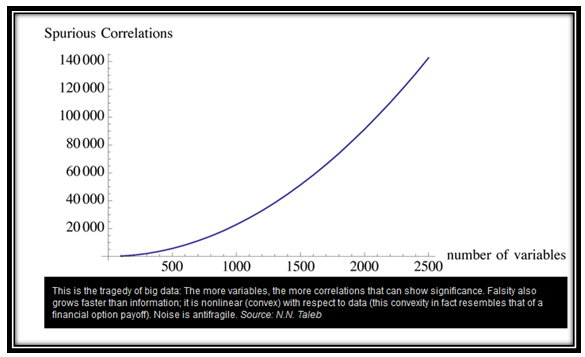

While it is fashionable to say that the analysis of big data holds all the answers, there are a number of issues that need to be understood. When large amounts of data are analyzed, many correlations emerge, that is, situations in which two quantities A and B move together: when A increases, B increases as well. However, as the number of variables increases, so does the number of chance correlations. A chance correlation is simply a random coincidence. N. N. Taleb, in his book "Antifragile: Things That Gain from Disorder", describes how the number of spurious correlations grows as the number of analyzed variables increases.

This, Taleb says, is the problem with big data: the number of spurious results can exceed the number of genuine results by as much as a factor of eight!
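To see why chance correlations multiply, here is a minimal simulation sketch in Python. It is not Taleb's own calculation. It assumes variables that are pure, independent random noise, 30 observations per variable, and a cutoff of |r| > 0.361, which is roughly the two-tailed 5% significance threshold for a Pearson correlation over 30 observations. Because no variable is genuinely related to any other, every pair that crosses the cutoff is a spurious result. The helper names (`pearson`, `random_variables`, `spurious_pairs`) are illustrative, not taken from any library.

```python
import random
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)


def random_variables(num_vars, num_obs, seed=42):
    """Hypothetical helper: num_vars independent variables of pure Gaussian noise.

    Variables are drawn one after another from one seeded generator, so the
    first k variables are identical for any num_vars >= k.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(num_obs)] for _ in range(num_vars)]


def spurious_pairs(variables, threshold=0.361):
    """Hypothetical helper: index pairs whose |correlation| exceeds threshold.

    Since every variable is independent noise, every returned pair is a
    chance correlation. The default threshold is an assumption (about the
    5% cutoff for 30 observations).
    """
    return [
        (i, j)
        for (i, xs), (j, ys) in combinations(enumerate(variables), 2)
        if abs(pearson(xs, ys)) > threshold
    ]


if __name__ == "__main__":
    all_vars = random_variables(100, 30)
    for k in (10, 50, 100):
        hits = spurious_pairs(all_vars[:k])
        total = k * (k - 1) // 2  # number of distinct pairs tested
        print(f"{k:>4} variables: {total:>5} pairs tested, {len(hits):>4} flagged by chance")
```

With k variables there are k(k-1)/2 pairs to test, so 10 variables give 45 pairs while 100 give 4,950. At this cutoff roughly 5% of pairs should be flagged purely by chance, which means the count of spurious "findings" grows roughly with the square of the number of variables, even though none of them is real.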

Should we dismiss big data, then? Does it have no value if spurious results are so numerous?

Fortunately, the answer is no, but the problem does point to the pitfalls of using statistics recklessly. With the growing use of big data and the many correlations it can reveal, statisticians are suddenly back in demand. I previously discussed a case in which a retail store chain used its vast databases to determine whether a customer was pregnant. Statistics played a big role in that research, and even more powerful statistical analysis is now being brought into play. The computing power of the cloud also makes increasingly complex analyses possible, provided one knows how to carry them out.

As a result, statistics is becoming an increasingly popular career option too. Cloud computing and big data have ensured that the nerd who really understands statistics will be able to name his or her own price. In many companies, the CIO is now an integral part of strategy meetings. Since this analysis is so central to company strategy, it is critical that the cloud infrastructure companies use is robust and reliable. For an example, check out GMO Cloud's High Availability page. Careful CIOs choose great statisticians and great cloud service providers. Both are essential for a good night's sleep!


About the Guest Author:

Sanjay Srivastava has been active in computing infrastructure and has participated in major projects on cloud computing, networking, VoIP and the creation of applications running over distributed databases. Because of his military background, his focus has always been on the stability and availability of infrastructure. Sanjay was the Director of Information Technology in a major enterprise and managed the transition from legacy software to fully networked operations using private cloud infrastructure. He now writes extensively on cloud computing and networking and is about to move to his farm in Central India, where he plans to use cloud computing and modern technology to improve the lives of rural folk in India.