How Cloud Computing is Changing Research Methods

How has cloud computing changed the lives of researchers and scholars? It is a fascinating subject, and I’ve come across some interesting findings on the matter.

The cloud has affected research in several ways, but its most profound effect has been on how data is stored and handled. Three fundamental shifts are under way that will change the way researchers work.

1. Big data

Previously, the data researchers collected was only a sample, because (a) they had no means of collecting large volumes of data and (b) they could not handle large volumes of data.

Cloud computing and the advent of big data have changed all that. Researchers can now work with enormous quantities of data, effectively freeing themselves from sampling. Using a small sample to predict the behavior of an entire population had major shortcomings and inevitably led to compromises and inaccuracies.

Cloud computing and big data allow researchers to overcome this limitation. The insights available from data at this scale can be far richer than those drawn from a small sample.
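The "freedom from sampling" argument can be made concrete with the standard margin-of-error formula for a sample mean, including the finite population correction. This is a minimal sketch that assumes simple random sampling and a known population standard deviation; the population size and standard deviation are hypothetical values. It shows sampling error shrinking as the sample grows and reaching zero when the whole population is analysed, although measurement error and bias in the data itself remain.

```python
import math


def margin_of_error(sigma: float, n: int, population: int, z: float = 1.96) -> float:
    """Approximate margin of error for a sample mean under simple random sampling.

    sigma: population standard deviation (assumed known for this sketch)
    n: sample size
    population: total population size N
    z: critical value (1.96 gives roughly 95% confidence)
    """
    if not 1 <= n <= population:
        raise ValueError("sample size must be between 1 and the population size")
    if population == 1:
        return 0.0
    standard_error = sigma / math.sqrt(n)
    # Finite population correction: drives the error to zero as n approaches N.
    fpc = math.sqrt((population - n) / (population - 1))
    return z * standard_error * fpc


# Hypothetical population: 1,000,000 records with a standard deviation of 15.
N, SIGMA = 1_000_000, 15.0
for n in (100, 10_000, N):
    print(f"n={n:>9,}: +/-{margin_of_error(SIGMA, n, N):.4f}")
```

The last case, where every record is used, has no sampling error at all, which is the sense in which big data removes the need to sample.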

2. Big picture

The second major change lies in the reduced need for exact measurements. Anyone familiar with database queries knows that a query returns only the records that exactly match the value you are looking for (SELECT * FROM EMPLOYEE WHERE AGE = 25). Because data was limited, you sought precision to get better results.
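The exact-match query above can be run against a small in-memory database using Python's built-in `sqlite3` module. In this sketch the EMPLOYEE rows and the NAME and SALARY columns are hypothetical. The second query is an illustrative contrast: instead of matching one precise value, it summarizes the table by ten-year age band, closer to the "big picture" view this section argues for.

```python
import sqlite3

# Hypothetical EMPLOYEE table; only the AGE column comes from the text.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE EMPLOYEE (NAME TEXT, AGE INTEGER, SALARY REAL)")
conn.executemany(
    "INSERT INTO EMPLOYEE (NAME, AGE, SALARY) VALUES (?, ?, ?)",
    [("Asha", 25, 52000), ("Ben", 31, 61000), ("Chen", 25, 49500), ("Dara", 42, 75000)],
)

# Exact match: only rows whose AGE is precisely 25 are returned.
exact = conn.execute("SELECT * FROM EMPLOYEE WHERE AGE = 25").fetchall()

# Aggregate view: one summary row per ten-year age band (integer division in SQLite).
bands = conn.execute(
    "SELECT (AGE / 10) * 10 AS BAND, COUNT(*), AVG(SALARY) "
    "FROM EMPLOYEE GROUP BY BAND ORDER BY BAND"
).fetchall()

print(exact)
print(bands)
conn.close()
```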

Cloud computing and big data allow you a different luxury: you no longer need pinpoint precision. Take the example of a small candy shop. Every night at closing time, the owner knows exactly how much he has sold and what his profit is. This is small data, and its values are known very accurately. Now scale this up to the sales data of Wal-Mart. Do you think they have equally exact figures every night? Of course not. Even when their accounts close, are they 100% accurate down to the last dime? Almost certainly not. Does it really matter? No, it does not. A dollar here or there makes no difference at that scale; it is the macro insights that really matter.
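The candy shop versus Wal-Mart comparison is at heart a point about relative error: the same absolute discrepancy matters less as the total grows. The nightly sales figures below are hypothetical round numbers chosen for illustration, not real data from either kind of business.

```python
def relative_error_pct(discrepancy: float, total: float) -> float:
    """Express an absolute discrepancy as a percentage of the total."""
    if total == 0:
        raise ValueError("total must be non-zero")
    return abs(discrepancy) / abs(total) * 100


# Hypothetical nightly sales totals in USD (illustrative only).
candy_shop_sales = 500.0
large_retailer_sales = 1_000_000_000.0

for label, total in (("candy shop", candy_shop_sales), ("large retailer", large_retailer_sales)):
    print(f"{label}: a $1 discrepancy is {relative_error_pct(1.0, total):.8f}% of sales")
```

For the shop, one dollar is a fifth of a percent of the night's takings; for the retailer it is a ten-millionth of a percent, far too small to change any decision drawn from the totals.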

3. Prediction from patterns

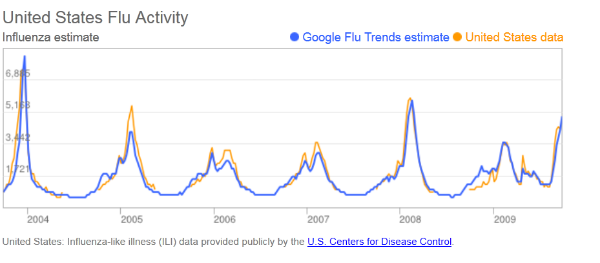

The third change big data has brought to research is a shift from searching for causes to understanding patterns. Traditional research looks for the cause of an event or phenomenon in the hope of predicting future events. With cloud computing and big data, however, you need not find the cause of an event; you need only understand the pattern. Today, some stock market analysts examine millions of tweets to gauge the mood of a population and thereby predict whether the stock market will rise or fall.

Will these three changes have a major impact on research? Will we see new patterns of research emerge? Time will tell. But one thing is certain: change is just around the corner.


About the Guest Author:

Sanjay Srivastava has been active in computing infrastructure and has participated in major projects on cloud computing, networking, VoIP, and the creation of applications running over distributed databases. Given his military background, his focus has always been on the stability and availability of infrastructure. Sanjay was the Director of Information Technology at a major enterprise and managed its transition from legacy software to fully networked operations using private cloud infrastructure. He now writes extensively on cloud computing and networking and is about to move to his farm in Central India, where he plans to use cloud computing and modern technology to improve the lives of rural folk in India.

Sankarambadi Srinivasan, ‘Srini’, is a maverick writer, technopreneur, geek and online marketing enthusiast rolled into one. He began his career as a naval weapons specialist. Later, he sold his maiden venture and became head of an offshore database administration company in Mumbai. He went on to become Chief Technology Officer of one of the largest online entities, where he led the consolidation of 300 online servers and introduced several Web 2.0 initiatives. He holds a Master’s degree in Electronics and Telecommunication.